Written by

Margaret Jennings

It might be simple, but I’m interested in building AI tools that people actually want to use. I’ve spent the last three months listening to today’s workforce in an attempt to understand their relationship to AI.

Source: Nicola Bergamaschi for Vogue Poland (instagram, website)

We interviewed 20 managers - of customers, processes, and teams - across the United States. They work in non-technical industries including government, consulting, and insurance. They are “knowledge workers,” or those whose job is predominantly processing information, typically sitting at a desk. According to a recent Gartner report, knowledge workers make up 71% of the US workforce in 2023, and according to a recent McKinsey report, Generative AI has the potential to increase their productivity by ~35-70%, unlocking $6.1-7.9T in economic value.

So what’d we learn by talking to the largest workforce in the world’s largest economy?

No matter the industry, today’s knowledge workers are looking for fulfillment. They want to complete their tasks on time and to a high standard. They want to receive recognition from their colleagues and be rewarded with higher salaries and bigger opportunities. If we can build AI tools that help knowledge workers find new ways to do this, they’ll come on board.

The issue is, knowledge workers don’t trust AI.

Here’s why:

It’s all been too fast, and too soon. Workers are overwhelmed by ChatGPT’s rapid rise and the narrative that their job can, and will, be replaced by this new technology. This looming fear of “Human v. Machine” lessens their enthusiasm when it comes to adopting an AI tool. And for good reason: OpenAI’s recent paper on automation, “GPTs are GPTs: An early look at the labor market impact potential of large language models,” argues that white collar jobs will be automated before blue collar jobs.

They don’t know who is responsible for the output. Employees want to know who on their team did what. Using ChatGPT discreetly can look like “cheating” in the workplace: workers worry that AI gives their colleagues skills that they haven’t earned and opportunities they haven’t worked for.

We aren’t actually used to objectivity. Knowledge workers are interested in the idea of an AI executive coach that can review all their meetings, emails, and material output to provide feedback as an objective third party. Those who are actually responsible for providing feedback to team members - aka people managers - find this feature disconcerting. People are still people. Managers don’t want to delegate feedback to an AI for fear of misdirection. Instead, they want to keep the humanity in management, whereby an individual’s growth and a manager’s understanding can reflect information that may not be available to AI.

There’s still a big question mark around data security and privacy. Back in May, Apple, Spotify, Verizon, Goldman Sachs, Citigroup, and various other global enterprises banned or restricted their employees’ use of ChatGPT, citing privacy reasons. Today’s workforce is concerned about keeping their workplace data secure. They’re also worried about how their interaction data may be used, and whether it could be used to train AI specifically to take away their job.

If we believe that AI will be the great equalizer of opportunities and skills with downstream effects of productivity gains, we need to build tools that knowledge workers trust. As a result, there is an emerging new feature set focused on user trust that is required for every AI product:

Control. People are not ready for magic. The focus instead should be: how do we enable the end user to steer AI to complete tasks that they don’t view as essential? Think of all the unseen work in your day - the repetitive, rote tasks - that no one will mention during a performance review. It’s important that we maintain a clear line between the AI and the user. The worker-in-the-loop should have full control over the tool’s processing and ultimately be accountable for the tool’s output, so that potential hallucinations or missteps are caught before they cause harm.

Transparency. Workers want to know how an AI tool works, but they don’t need to become a prompt engineer or research scientist to understand every detail. They instead want to understand the basics at a high level - who owns the model? What data is the model using? Which model is best suited for which task? - so that they can avoid an ignorance-fueled anxiety. It’s important that they can see how the model “does its work” and that the tool provides explanations and interpretability where needed (for example, Bing builds trust and avoids potential missteps by responding with an answer and references).

Predictability. If an AI tool is going to be adopted by our workforce, the expectation should be that models are not 100% accurate, 100% of the time.

Unlike other SaaS applications or software that are focused on accuracy and precision, AI tools that are powered by LLMs and interact in natural language are useful collaborators for workers. Look at Midjourney, DALL-E, and TikTok - they broke the internet’s fourth wall: users are now online to create, not just to distribute or organize information. We should establish AI tools as collaborators that unlock new superpowers and enable one to create things that were otherwise impossible, with the understanding that the tool - like any collaborator - can also be inaccurate or inconsistent.

Security. Workers want to know where their data goes and who has access to the data when they use an LLM. They don’t want to get fired for using the wrong AI model and putting their company data at risk. It’s important for workers to see the AI tool as safe and secure as well as approved by upper management.

Control, Transparency, Predictability, and Security are the cornerstones for user adoption. Establishing user trust by defining the appropriate levers for user input to steer AI continues to be researched in the Human-Centered AI and Human-AI Collaboration academic fields.

For product builders, a great way to illustrate fully vs. semi-autonomous AI within a process is to look at how AI is being adopted in visual media. The following are two examples with divergent ideas of Human-AI collaboration.

The first example is a beer commercial made by an AI model. The prompt was to create a beer commercial.

This is the content that skeptics use to discredit AI. While from afar it might look like a beer commercial, it’s a mess of disjointed visuals, all loosely related to the idea of beer. You can tell there was a lack of human input, as there’s no narrative perspective or lasting message.

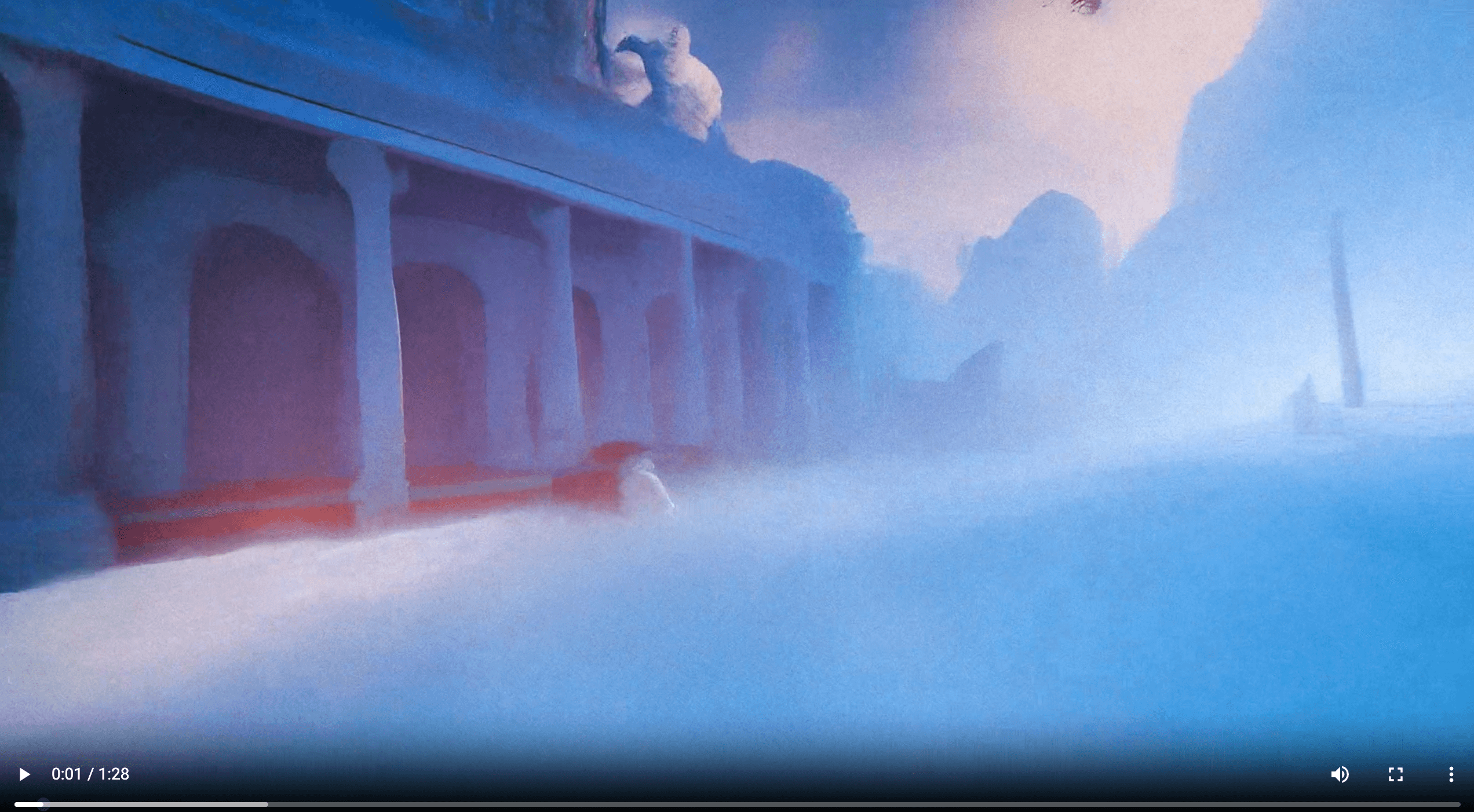

The second example is After Light, directed by Julien Vallee and Eve Duhamel. The video combines live action footage with Runway’s Gen-1, NeRFs, Generative AI and traditional post-processing.

Source: valleduhamel website

This film shows how AI can be incorporated into our existing workflows to generate a new media form. The film isn’t exactly a music video or an animated short. It allows choreography to leap through far-off spaces, climates and figures. It is impossible to predict what comes next, bringing viewers into a new cinematic experience.

We can learn a lot about building AI products by analyzing After Light. If we’re going to build AI tools that last, we need to give our users both training wheels and time. After Light does this. The video has moments that feel familiar: whether it’s the dancer’s choreography or the speeding cars, we know a human is directing the experience. We can appreciate the new worlds that AI is bringing us into, but we still feel like there’s a human in control.

Knowledge workers expect the same as artists. The goal should not be to replace their job with AI, but instead to respect that a job of dignity requires a human to direct and manage its intellectual process. Knowledge workers have the most to gain by collaborating with LLMs, and the most to lose if those tools are implemented carelessly. My belief is that AI tools can raise the knowledge floor - emboldening a workforce’s dignity and ingenuity at work. After speaking with managers across the United States this summer, I believe it’s entirely possible to deliver AI with empathy, leading to greater collaboration, adoption, and success.